Citizen Science, Experts, and Expertise.

Corresponding author Email: jordanre@msu.edu

DOI: http://dx.doi.org/10.12944/CWE.16.2.05

Copy the following to cite this article:

Jordan R, Sorensen A, Gray S. Citizen Science, Experts, and Expertise. Curr World Environ 2021;16(2). DOI:http://dx.doi.org/10.12944/CWE.16.2.05

Copy the following to cite this URL:

Jordan R, Sorensen A, Gray S. Citizen Science, Experts, and Expertise. Curr World Environ 2021;16(2). Available From: https://bit.ly/3gPy40a


Article Publishing History

| Received: | 08-07-2021 |
|---|---|
| Accepted: | 30-08-2021 |
| Reviewed by: | Gaurav Dhawan |
| Second Review by: | Prabin Shrestha |
| Final Approval by: | Dr. Umesh Chandra Kulshrestha |

Introduction

Citizen science includes the field of public participation in scientific research and has drawn the attention of environmental researchers and the public alike. The involvement of the general public in research has resulted in a greater invitation for public audiences to participate in critical conversations about science, health, and environmental wellness (to name a few domains) and has generated interest among researchers, within international funding agencies, at museums, in science centers, and among social scientists (e.g., 1; 2). While others have posited different definitions of citizen science, we borrow the definition from 3, with its specific focus on scientific inquiry: "partnerships between those involved with science and the public in which authentic data are collected, shared, and analyzed." Citizen science, therefore, relies on cooperation between a range of scientific experts and non-experts. Examining this partnership can call into question the notion of expertise as it pertains to both these experts and members of the public.

While it may seem clear that those who engage in professional environmental science would consider themselves to have greater-than-lay knowledge and skills, what constitutes the transition between lay person and expert has been the subject of significant scholarly study (e.g., 4). 5 loosely identifies knowers tasked with epistemic work as those to whom expertise or credibility is attributed. This definition, however, relies on trust in the expert (e.g., 6) or, as 5 describes, at least on trust in the peer review process. Deference to, and therefore trust in, experts has become necessary for individuals to make informed choices given the many decisions they face in a single day (e.g., for food and the environment, see 7). Such information does not come easily, however, because experts often disagree (e.g., on environmental policy; 8), and the weight that decision-makers give to different expert recommendations is not stable (e.g., 9). A gap remains in the literature on whether experience working with environmental experts in citizen science helps individuals gain scientific expertise.

In several contexts, experts have been shown to know more, to have discrete strategies for accessing knowledge, and to have their knowledge better organized in their minds than novices do (e.g., 10). Furthermore, the pathway to expertise is not defined simply by time spent with ideas or problems (e.g., 11). That work describes the value of time spent in deliberate practice, in which tasks are improved through appropriate repetition and feedback, resulting in progressively better problem-solving 11. While citizen science projects require different levels of expertise, many share the goal of helping members of the public develop environmental monitoring skills. The pathway to such skills in environmental citizen science is not well studied.

Citizen scientists interact with scientific experts differently depending on the type of project (see 1 for more). In collaborative or co-created projects, individuals engage with experts as knowledge co-designers and therefore take part in collegial discussions rather than acting as receivers of knowledge. For us, it stood to reason that having experts present to provide progressive feedback to our citizen scientists (novices in this context) would help these individuals gain expertise in scientific problem-solving. Our own experience, however, prompted a closer look at this assumption: we anecdotally noted that the discussion seemed to differ when citizen scientists problem-solved without an expert in the room (Jordan and Sorensen, personal observations). We therefore set out to identify the conversational elements that differed, and the extent to which they differed, when environmentally oriented citizen scientists held discussions with and without an environmental scientist who had expertise in the focal area. In doing so, we hoped to determine in what ways an expert might enhance or limit citizen science study of a particular environmental issue.

Here we review transcripts from four co-created citizen science projects: two in which experts were present and two in which they were not. We used a semi-inductive coding scheme to determine the extent to which the discussions differed. After presenting these results, we discuss implications for future practice in this type of citizen science project.

Project Background

CollaborativeScience.org (a co-created type of citizen science)

12 define co-created citizen science as projects designed by members of the public and (often, but not always) scientists, in which at least some participants are engaged in almost all aspects of the scientific enterprise. CollaborativeScience.org was designed as a co-created project to help individuals use modeling technology to conduct locally based, but regionally connected, natural resource stewardship projects. Using a series of web-based modeling and social media tools, community members were supported in conducting authentic science, including making field observations, engaging in collaborative discussions, graphically representing data, and modeling ecological systems. The goal of these efforts was to enable volunteers to engage in resource and open-space conservation and management.

Methods

To determine how the two sets of discussions differed, we drew on transcripts from previous CollaborativeScience.org studies, focusing on the discussions in which the design and implications of the research were most discussed. These were Steps 4 and 5 in Table 1.

Transcripts were drawn from four CollaborativeScience.org projects:

Two projects had an expert present:

- Invasive Species control study. These individuals came together over concerns about the spread of an invasive plant species in an open space of shared concern.

- Storm water measurement study. These individuals came together to measure bank erosion on a stream of shared interest before and after heavy rainfall events.

Two projects did not have an expert present:

- Bird nest parasite control study. These individuals came together to design and implement a study that would reduce the amount of nest parasitism on a bird species of shared concern.

- Drinking Water Quality Concern study. These individuals came together over concerns regarding environmental impact on water quality and in particular the presence or absence of common pollutants.

In all four studies, the individuals already knew each other and were working on conservation issues. Some had training through their statewide Cooperative Extension-led naturalist programs (e.g., Master Naturalists, Environmental Stewards). Each group had one member who responded to the call to join CollaborativeScience.org, which offered grant funds of up to $2,000 for project development. As a condition of participation, the group had to take part in at least four audio-recorded, facilitator-led collaborative modeling exercises. The facilitator was one of the authors, and another author handled the recording. None of the authors were content experts. All participants consented to take part in this study following the required human subjects research disclosures.


For this project, we used the MentalModeler (www.mentalmodeler.com) collaborative modeling software. This software allows users to evaluate relationships between the structural (i.e., the parts) and functional (i.e., the mechanisms that drive the outcome) aspects of a concept map that they create. The concept mapping interface allows learners first to brainstorm the variables or components that are important to the system being modeled. It also allows learners to define "fuzzy" relationships (including feedbacks) between variables, ranging from strong positive to strong negative, which are converted to values between +1 and -1. After the initial structure of the model is defined and qualified in the concept mapping interface, the concept map can be viewed as a matrix in the matrix interface, allowing the network structure of the model to be evaluated and exported to other analysis and visualization tools such as Excel. The scenario interface allows learners to evaluate how the system state responds (which variables increase or decrease, and to what relative degree) under "what if" scenarios in which any variable, or several variables, in the concept map is increased or decreased.
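The matrix and scenario steps described above follow the general logic of fuzzy cognitive maps (FCMs). The sketch below is a minimal, generic FCM simulation and is not MentalModeler's actual code: it assumes a weight matrix `W` in which `W[i][j]` (between -1 and +1) is the effect of concept i on concept j, a logistic squashing function, and the update rule A_j <- f(A_j + sum_i A_i * W[i][j]) iterated to a steady state. A "what if" scenario clamps one concept high and compares the steady state with the unclamped baseline. The concept names, weights, and the helpers `squash` and `run_fcm` are hypothetical illustrations loosely themed on the invasive species project.

```python
import math

# Hypothetical concept names and weights, loosely themed on the invasive-species
# project; they are illustrative, not taken from any participant model.
CONCEPTS = ["pulling effort", "invasive cover", "native plants"]
# W[i][j] in [-1, 1]: assumed effect of concept i on concept j.
W = [
    [0.0, -0.75, 0.0],   # pulling effort reduces invasive cover
    [0.0, 0.0, -0.5],    # invasive cover suppresses native plants
    [0.0, -0.25, 0.0],   # native plants slightly resist invasion
]


def squash(x: float) -> float:
    """Logistic sigmoid that keeps activations in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def run_fcm(weights, clamp=None, steps=200, tol=1e-9):
    """Iterate a fuzzy cognitive map until activations stop changing.

    Assumed update rule: A_j <- squash(A_j + sum_i A_i * weights[i][j]).
    `clamp` maps a concept index to a fixed activation ("what if" scenario).
    """
    n = len(weights)
    clamp = clamp or {}
    state = [clamp.get(j, 1.0) for j in range(n)]  # assumed initial activation
    for _ in range(steps):
        new_state = [
            clamp[j] if j in clamp
            else squash(state[j] + sum(state[i] * weights[i][j] for i in range(n)))
            for j in range(n)
        ]
        change = max(abs(a - b) for a, b in zip(new_state, state))
        state = new_state
        if change < tol:
            break
    return state


baseline = run_fcm(W)
scenario = run_fcm(W, clamp={0: 1.0})  # "what if pulling effort is maximal?"
for name, b, s in zip(CONCEPTS, baseline, scenario):
    print(f"{name:>15}: {s - b:+.3f} relative to baseline")
```

Only the sign and relative size of each change are meant to be read, which mirrors the "what increases/decreases and to what relative degree" output described above; other squashing functions or update rules would shift the magnitudes.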

Table 1: Structure of CollaborativeScience.org (the discussions transcribed for the current study are Steps 4 and 5).

Step 1: Respond to the call and name at least six members of the new group.

Step 2: Attend a virtual or in-person information session (depending on preference and location). Groups had to be entirely in person or entirely online, and no hybrid groups were facilitated: a group that began online stayed online, and a group that began in person stayed in person.

Step 3: Complete an online "pre" survey and build an individual model using mentalmodeler.org. Send models to the facilitator, who "merges" the model terms for refinement during the next discussion.

Step 4: Attend a second session where the model terms are discussed and refined collaboratively.

Step 5: Attend a third session where the model arrows are drawn and relationships are defined. At the end of this session, and based on the model, a research question is defined and data collection procedures are discussed (and refined over the following weeks via email and short conversations).

Step 6: Data collection, data analysis, and compilation of results.

Step 7: Attend a fourth session to refine the model and discuss results. Participants complete an online post-survey.

Step 8: Discuss conclusions and make presentation plans.

Data Analysis

For this study, we compared the first and second group modeling discussions (Steps 4 and 5) for each of the four projects described above. During project development (see 13), discussions were audio recorded and then transcribed by one of the authors. To gather evidence for this paper, all authors read the transcripts and used a semi-inductive coding scheme in which major themes were identified by each author and compared during data discussion periods. During these discussions, themes were listed, redundancies were removed, and the remaining themes served as base codes, which were refined through subsequent readings of all transcripts. The refined codes were discussed until all authors reached agreement (see Table 2).

Table 2: Coding scheme

| Category | Code | Description | Example |
|---|---|---|---|
| Question | Seeking new information | This is a question where the answer is clearly unknown. | Participant: Do we really know what happens when a particular male is eliminated from the box. Do we know that three more males do not replace him, or another...? |
| Question | Reaffirming information | This is a question where the answer may be known and the answer in many ways is a re-affirmation. | Participant 1: Plot size, we're thinking the bigger the plot size, the better the restoration effort or what do you think? Participant 2: Yeah, that is my model currently… |
| Sharing | New claim introduced without question | This is an idea or statement of fact that is new and not introduced as a response to a question. | Participant: Now if you look at the eradication effort, that is where we have the pulling and the herbicide. So do we need to say eradication? Maybe we do because the effort is a big deal that gets related to the people. So pulling and the herbicide. Those are both the eradication. The people element, we have the visitors coming to the monument. We have local groups associated with the monument, neighbors of the monument. People we can convince to pull. so I think the eradication effort is really going to affect some of that. |
| Sharing | New claim introduced with question | This is an idea or statement of fact that is new but introduced in response to another's question. | Participant 1: Do we really know what happens when a particular male is eliminated from the box. Do we know that three more males do not replace him, or another...? Participant 2: If it were to work, which it might not, cause some of them will reject the eggs, but if it were to work, it could keep sparrows on a failed attempt, as opposed to if that box was vacated, … |
| Counter | Claim in counter to information | This is a claim that is given in direct counter to another's idea or claim. | Participant 1: is that point source? Participant 2: all the rainwater that falls…so I think point source would capture rain water Expert: Rain water is not point source Participant 2: well… Expert: Only point sources you can identify the sources rain water wouldn't…it's what leads to nonpoint source pollution |
| Collaborate | Claims built in collaboration | This is a series of claims being added to claims by multiple members without topical change or question. This is akin to co-creation. | Participant 1: do we think there is a link between ecosystem health and water quality? Participant 2: I would think the healthier ecosystem the better you water quality is going to be, but that might be more your source water quality as opposed to your end point water quality. Participant 1: so that is something we need to tease apart. Because what we originally talked about and cared about what the end point water quality... Participant 2: you could go from ecosystem health up to source up in the top left. Participant 1: I see what you are saying. So maybe some of these things we have going to here, should go to the source? Like oil pollution, it impacts water quality but it impacts it by impacting the source. Participant 3: Umm Hmm. Participant 4: so that water quality represents the actual potable water quality, like the end of it? I think that is what you suggested. Participant 5: I think it would make more sense to piece it out. Participant 3: that is what I was thinking. Participant 5: the end point potable water, and then the source that goes into it. Participant 2: Yea |

Once the coding scheme was created, each speaker turn was coded only once, based on its dominant theme. Speaker turns were separated in an Excel spreadsheet. The "important" discourse was highlighted; filler words (ah's and um's), indecipherable words, and side conversations (e.g., talking on a cell phone) were not highlighted. Operational and logistic questions (e.g., "which day are we meeting?", "how far off the road is the stream?") were then removed, and the salient features of learning (i.e., the knowledge orientation type, or the main words that justify the code in Table 2) were underlined. One code was then assigned to each turn. Because the number of turns varied by conversation, results are presented as percentages. Given that our goal was simply to compare patterns between the two discussions in terms of the major themes listed above, and that overall counts of some code types were low, we used visual inspection of the data to interpret results.
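Because turn counts differed between conversations, the coded turns are normalized to percentages. The minimal sketch below shows that normalization, along with the ratio of questions to co-creative collaboration discussed in the Results. It assumes one dominant code per turn using the Table 2 category names, with operational turns already removed; the function names and the example sequence are hypothetical and are not data from this study.

```python
from collections import Counter

# Category labels follow Table 2; operational/logistic turns are assumed to be
# removed before this step, as described in the text.
CATEGORIES = ("Question", "Sharing", "Counter", "Collaborate")


def code_percentages(turn_codes):
    """Percentage of coded turns falling in each Table 2 category."""
    if not turn_codes:
        raise ValueError("no coded turns")
    counts = Counter(turn_codes)
    unknown = set(counts) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unrecognised codes: {sorted(unknown)}")
    total = len(turn_codes)
    return {c: 100.0 * counts[c] / total for c in CATEGORIES}


def question_to_collaboration_ratio(turn_codes):
    """Ratio of Question turns to Collaborate turns; None if no collaboration."""
    counts = Counter(turn_codes)
    if counts["Collaborate"] == 0:
        return None
    return counts["Question"] / counts["Collaborate"]


# Hypothetical sequence of one dominant code per speaker turn (not study data).
example = ["Question", "Sharing", "Collaborate", "Question",
           "Collaborate", "Sharing", "Question", "Collaborate"]
pct = code_percentages(example)
ratio = question_to_collaboration_ratio(example)
print(pct, ratio)
```

Reporting percentages rather than raw counts lets a short conversation and a long one be compared on the same scale, which is why the sketch divides by the number of retained turns in each conversation.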

Results

On average, conversations lasted about an hour, with 9 to 13 conversational turns (i.e., points at which a new topic was introduced). The discussions differed in the extent to which operational ideas were discussed: between 67% and 98% of each conversation was knowledge oriented (see Table 2) rather than operational clarification (i.e., discussing the logistics of the meeting and the various tasks associated with meetings).

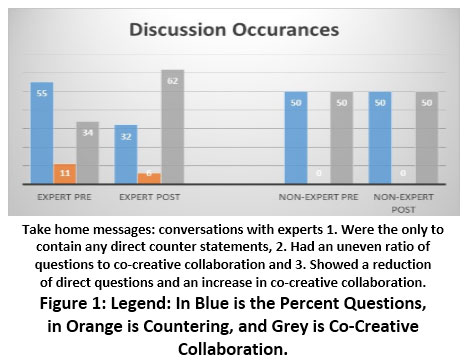

After removing the extraneous and logistic operational questions, we found differences between the conversations that included experts (n = 4; 2 pre and 2 post) and those that did not (n = 4; 2 pre and 2 post). Conversations without experts did not contain any direct counter statements (Figure 1). We also noted differences in the ratio of questions to co-creative collaboration. In the post discussions of the two groups that included experts, direct questions decreased and co-creative collaboration increased, whereas the non-expert discussions showed no such change, with the ratio remaining one to one.

Figure 1: Legend: blue, percent questions; orange, countering; grey, co-creative collaboration.

Discussion

In summary, we found that a large portion of the discussions focused on knowledge-building conversation (as opposed to logistic/operational or extraneous talk). In addition, only experts challenged the ideas being discussed. Following these challenges, a greater proportion of knowledge co-creation occurred (i.e., in the second conversation). We noted no change in knowledge co-creation in conversations without an expert present. We discuss the implications of these findings below.

Given our small data set, we can do no more than speculate, but such speculation can help inform the design of future experiences for environmental citizen scientists. First, the experts were the only participants to offer direct counterclaims. We speculate that a lack of confidence in their own competence may have driven the absence of countering among the non-experts, as has been found in other expert/novice studies (e.g., 14).

More intriguing, however, was that co-creation occurred at a much higher rate in the second discussion of the expert groups, whereas it did not increase in the non-expert groups. Did the experts prompt something that made the discussion more generative? We returned to the transcripts post hoc and focused entirely on the nature of the experts' talk. One thing became clear, acknowledging a sample of only two individuals: the experts spoke exclusively at an abstract level, while the volunteers tended to speak both abstractly and in terms of the specific context. That is, the experts almost always discussed the phenomenon of interest outside of the specific context and tended to use the terms "like" or "similar" to relate that idea to other points in the model (e.g., "like we saw with X" or "similar to Y…"). Indeed, data support the notion that experts tend to use abstraction or generalization to transfer ideas from one context to another 15. Does this broader framing result in more generative discussion? In our previous work with primary, secondary, and post-secondary learners, we found that broadening novices' frame to a more global context resulted in more creative thinking 16. Further, such framing helped struggling learners develop more accurate mental models 17.

Again, we note that our data are limited. First, the study was not originally designed to compare expert and non-expert discussions; our inspection was opportunistic, based on what the authors noted while participating. Second, all authors participated in the study as facilitators and therefore run the risk of over-interpreting the data, because each had a broader context of the work. Although efforts were made to minimize this bias, the risk remains. Finally, the sample size was quite small. The consistency across the four groups suggests that the pattern merits further study, but more data are clearly needed to determine whether the difference persists. Nonetheless, as a pilot study, this paper describes a plausible phenomenon that warrants consideration in future environmental citizen science projects.

Several future directions follow from this pilot investigation. We found that experts asked few questions across the pre and post discussions. Because countering was also low, could there be a trade-off between co-creation and question asking? Did participants ask more questions because the expert was present, and did that set the conditions for co-creation? It is also possible that participants initially felt shy about co-creating when experts were present, although some studies have shown a certain level of comfort with experts present (e.g., 18).

Implications and Conclusions

More data on the role of experts in discussions with citizen scientists are clearly warranted. If citizen science is to be seen as a viable approach to collecting data about, and managing, environmental systems, it would serve us well to understand the role of experts, and in particular the extent to which they need to be involved in study design, data collection, and data interpretation and dissemination. This question is particularly important because the availability of experts in local or regional systems may be limited. Future work could directly manipulate how expert knowledge is engaged with citizen scientists.

Acknowledgments

The authors would like to thank the many citizen scientists who have participated in this and other projects over the years. In addition, two anonymous reviewers are acknowledged for their work in improving this draft. Finally, the work here was supported by a US National Science Foundation Grant (IIS-1227550).

References

1. Shirk, J. L., Ballard, H. L., Wilderman, C. C., Phillips, T., Wiggins, A., Jordan, R., ... & Bonney, R. (2012). Public participation in scientific research: a framework for deliberate design. Ecology and Society, 17(2).

2. Jordan, R., Crall, A., Gray, S., Phillips, T., & Mellor, D. (2015). Citizen science as a distinct field of inquiry. BioScience, 65(2), 208-211.

3. Jordan, R. C., Ballard, H. L., & Phillips, T. B. (2012). Key issues and new approaches for evaluating citizen-science learning outcomes. Frontiers in Ecology and the Environment, 10(6), 307-309.

4. Collins, H. M., & Evans, R. (2002). The third wave of science studies: Studies of expertise and experience. Social Studies of Science, 32(2), 235-296.

5. Bouchard, F. (2016). The roles of institutional trust and distrust in grounding rational deference to scientific expertise. Perspectives on Science, 24(5), 582-608.

6. Whyte, K. P., & Crease, R. P. (2010). Trust, expertise, and the philosophy of science. Synthese, 177(3), 411-425.

7. Wansink, B., & Sobal, J. (2007). Mindless eating: The 200 daily food decisions we overlook. Environment and Behavior, 39(1), 106-123.

8. Spruijt, P., Knol, A. B., Petersen, A. C., & Lebret, E. (2016). Differences in views of experts about their role in particulate matter policy advice: Empirical evidence from an international expert consultation. Environmental Science & Policy, 59, 44-52.

9. Miller, B. (2013). When is consensus knowledge based? Distinguishing shared knowledge from mere agreement. Synthese, 190(7), 1293-1316.

10. Persky, A. M., & Robinson, J. D. (2017). Moving from novice to expertise and its implications for instruction. American Journal of Pharmaceutical Education, 81(9).

11. Ericsson, K. A. (2008). Deliberate practice and acquisition of expert performance: a general overview. Academic Emergency Medicine, 15(11), 988-994.

12. Bonney, R., Ballard, H., Jordan, R., McCallie, E., Phillips, T., Shirk, J., & Wilderman, C. C. (2009). Public Participation in Scientific Research: Defining the Field and Assessing Its Potential for Informal Science Education. A CAISE Inquiry Group Report. Online Submission.

13. Jordan, R., Gray, S., Sorensen, A., Newman, G., Mellor, D., Newman, G., ... & Crall, A. (2016). Studying citizen science through adaptive management and learning feedbacks as mechanisms for improving conservation. Conservation Biology, 30(3), 487-495.

14. Thériault, A., Gazzola, N., & Richardson, B. (2009). Feelings of incompetence in novice therapists: Consequences, coping, and correctives. Canadian Journal of Counselling and Psychotherapy, 43(2).

15. Hinds, P. J., Patterson, M., & Pfeffer, J. (2001). Bothered by abstraction: The effect of expertise on knowledge transfer and subsequent novice performance. Journal of Applied Psychology, 86(6), 1232.

16. Jordan, R. C., Brooks, W. R., Hmelo-Silver, C., Eberbach, C., & Sinha, S. (2014). Balancing broad ideas with context: An evaluation of student accuracy in describing ecosystem processes after a system-level intervention. Journal of Biological Education, 48(2), 57-62.

17. Jordan, R. C., Sorensen, A. E., & Hmelo-Silver, C. (2014). A conceptual representation to support ecological systems learning. Natural Sciences Education, 43(1), 141-146.

18. McVey, M. H. (2008). Observations of expert communicators in immersive virtual worlds: implications for synchronous discussion. ALT-J, 16(3), 173-180.